STEPS TO BECOME A SUCCESSFUL SELF-DRIVING CAR ENGINEER

Course 1: Introduction

Course 2: Computer Vision

Course 3: Deep Learning

Course 4: Sensor Fusion

Course 5: Localization

Course 6: Path Planning

Course 7: Control

Course 8: System Integration

Course 1: Introduction

In this course, you will learn how self-driving cars work and take on your very first autonomous vehicle project: finding lane lines on the road! I'll also introduce you to the skills needed to become a successful autonomous vehicle engineer.

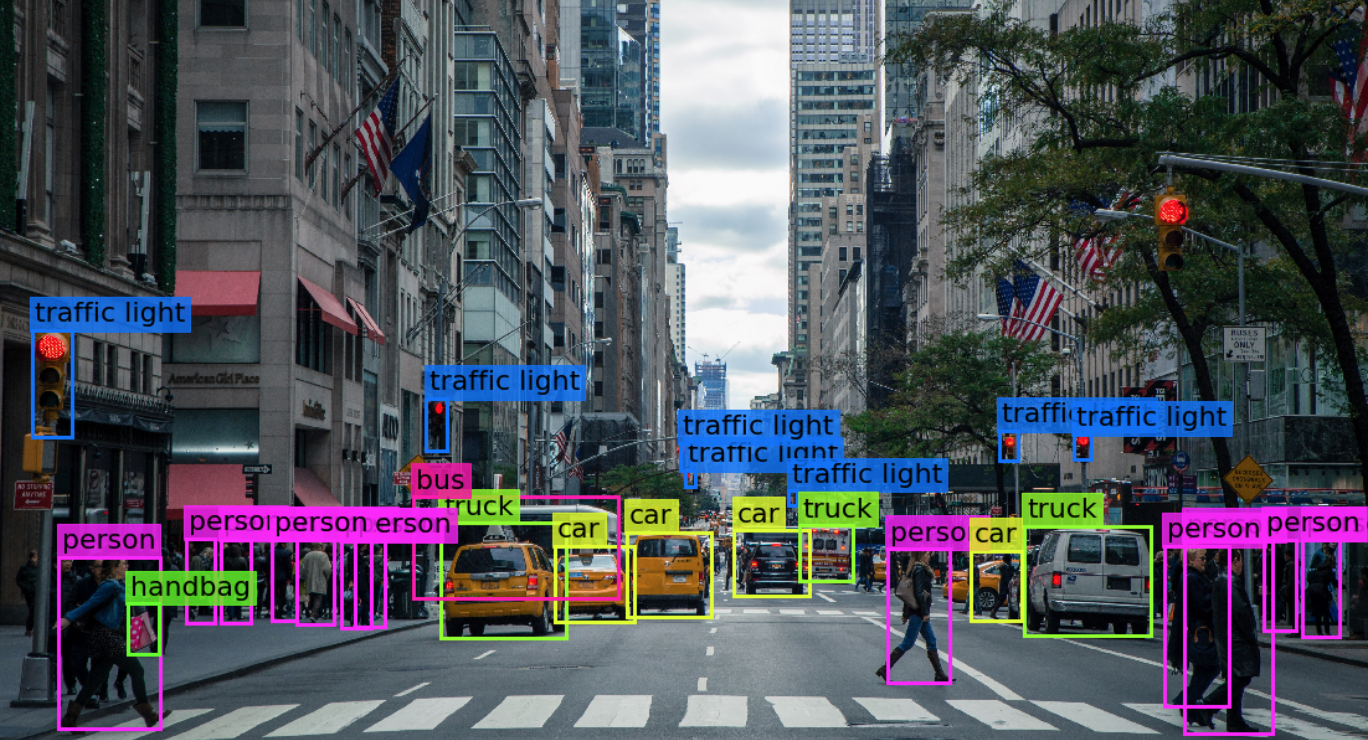

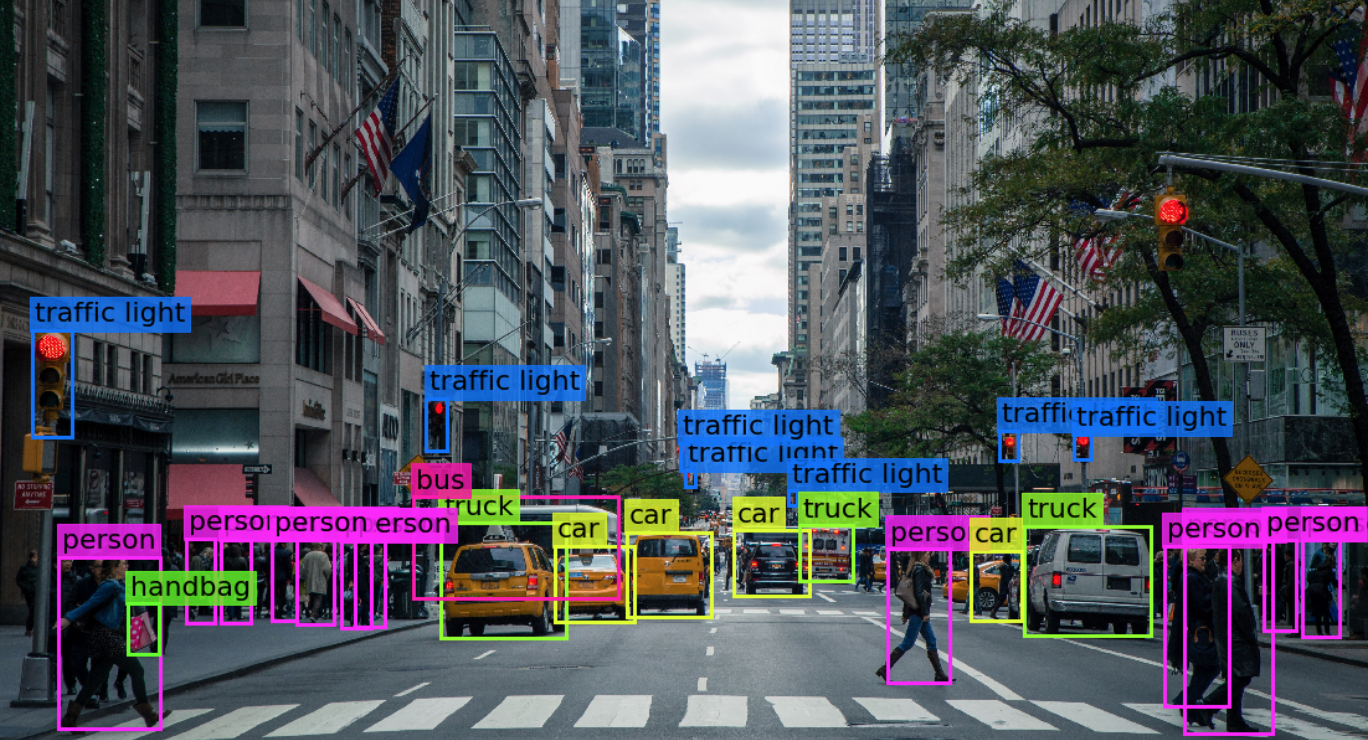

Course 2: Computer Vision

Computer vision is a field of computer science that works on enabling computers to see, identify and process images in the same way that human vision does, and then provide appropriate output. It is like imparting human intelligence and instincts to a computer. In practice, though, it is difficult to get computers to recognize images of different objects.

Computer vision is closely linked with artificial intelligence, as the computer must interpret what it sees, and then perform appropriate analysis or act accordingly.

Finding Lane Lines on a Road: for a detailed walkthrough, see https://github.com/naokishibuya/car-finding-lane-lines/blob/master/README.md

Advanced Computer Vision

Discover more advanced computer vision techniques, like distortion correction and gradient thresholding, to improve upon your lane lines algorithm!
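To make gradient thresholding a little more concrete, here is a minimal sketch in plain Python. It assumes a tiny grayscale image stored as a list of rows; the function names (`sobel_x`, `gradient_threshold`), the toy image, and the threshold values are illustrative assumptions, not the course's own code, which would normally use a library such as OpenCV on real camera frames.

```python
def sobel_x(image):
    """Horizontal Sobel gradient of a 2D grayscale image (list of rows).

    Border pixels are left at 0 because the 3x3 kernel does not fit there.
    """
    kernel = [[-1, 0, 1],
              [-2, 0, 2],
              [-1, 0, 1]]
    rows, cols = len(image), len(image[0])
    grad = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            grad[r][c] = sum(
                kernel[i][j] * image[r - 1 + i][c - 1 + j]
                for i in range(3)
                for j in range(3)
            )
    return grad


def gradient_threshold(image, low, high):
    """Binary mask: 1 where |gradient|, scaled to 0-255, lies in [low, high]."""
    grad = sobel_x(image)
    max_abs = max(abs(v) for row in grad for v in row) or 1
    return [
        [1 if low <= 255 * abs(v) / max_abs <= high else 0 for v in row]
        for row in grad
    ]


# Toy "road" image (an assumption): dark asphalt (10) with one bright
# vertical stripe (200) standing in for a lane line.
road = [[10, 10, 10, 200, 200, 10, 10, 10] for _ in range(5)]
mask = gradient_threshold(road, low=50, high=255)
for row in mask:
    print(row)
```

The mask lights up only around the left and right edges of the bright stripe, which is the property a lane-finding pipeline relies on: strong horizontal intensity changes tend to mark lane boundaries.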

Project 2: Advanced Lane Finding

Your goal is to write a software pipeline to identify the lane boundaries in a video from a front-facing camera on a car.

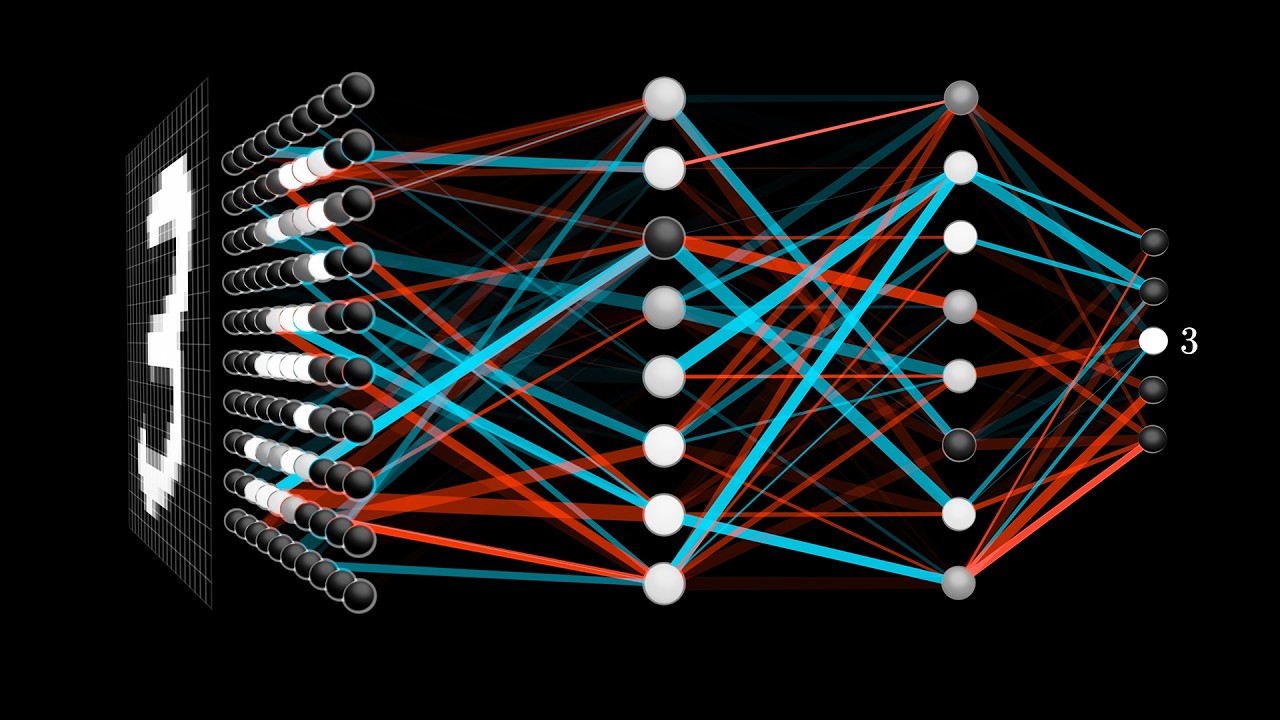

Course 3: Deep Learning

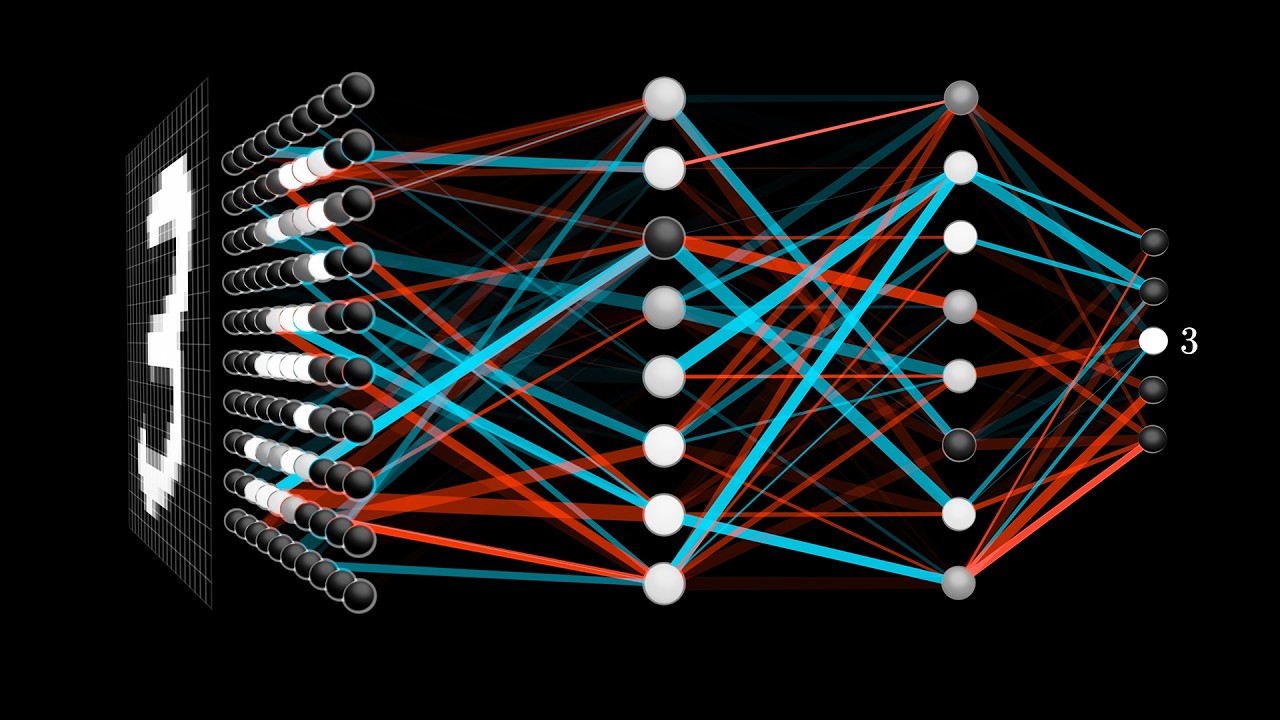

1. Neural Networks

Learn to build and train neural networks, starting with the foundations in linear and logistic regression, and culminating in backpropagation and multilayer perceptron networks.

2. TensorFlow

Vincent Vanhoucke, Principal Scientist at Google Brain, introduces you to deep learning and TensorFlow, Google's deep learning framework.

3. Deep Neural Networks

4. Convolutional Neural Networks (CNN)

Vincent explains the theory behind Convolutional Neural Networks and how they help us dramatically improve performance in image classification.

Project 3: Traffic Sign Classifier

You just finished getting your feet wet with deep learning. Now put your skills to the test by using deep learning to classify different traffic signs! In this project, you will use what you've learned about deep neural networks and convolutional neural networks to classify traffic signs.

5. Keras

Take on the neural network framework, Keras. You'll be amazed how few lines of code you'll need to build and train deep neural networks!

6. Transfer Learning

Learn about some of the most famous neural network architectures and how you can use them. By the end of this lesson, you'll know how to create new models by leveraging existing canonical networks.

Project 4: Behavioral Cloning

Put your deep learning skills to the test with this project! Train a deep neural network to drive a car like you!
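The Neural Networks lesson in this course starts from logistic regression, so a small sketch of that starting point may help. The code below fits a one-feature logistic regression with batch gradient descent in plain Python. The data, learning rate, epoch count, and function names are all assumptions chosen for illustration; the course itself moves on to TensorFlow and Keras for real networks.

```python
import math


def sigmoid(z):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, learning_rate=0.5, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w * x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean cross-entropy loss with respect to w and b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data (an assumption): small inputs belong to class 0, large ones to class 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0, 0, 1, 1]

w, b = train_logistic(xs, ys)
predictions = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in xs]
print(predictions)
```

A multilayer perceptron stacks many units like this one and uses backpropagation to compute the same kind of gradients layer by layer.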

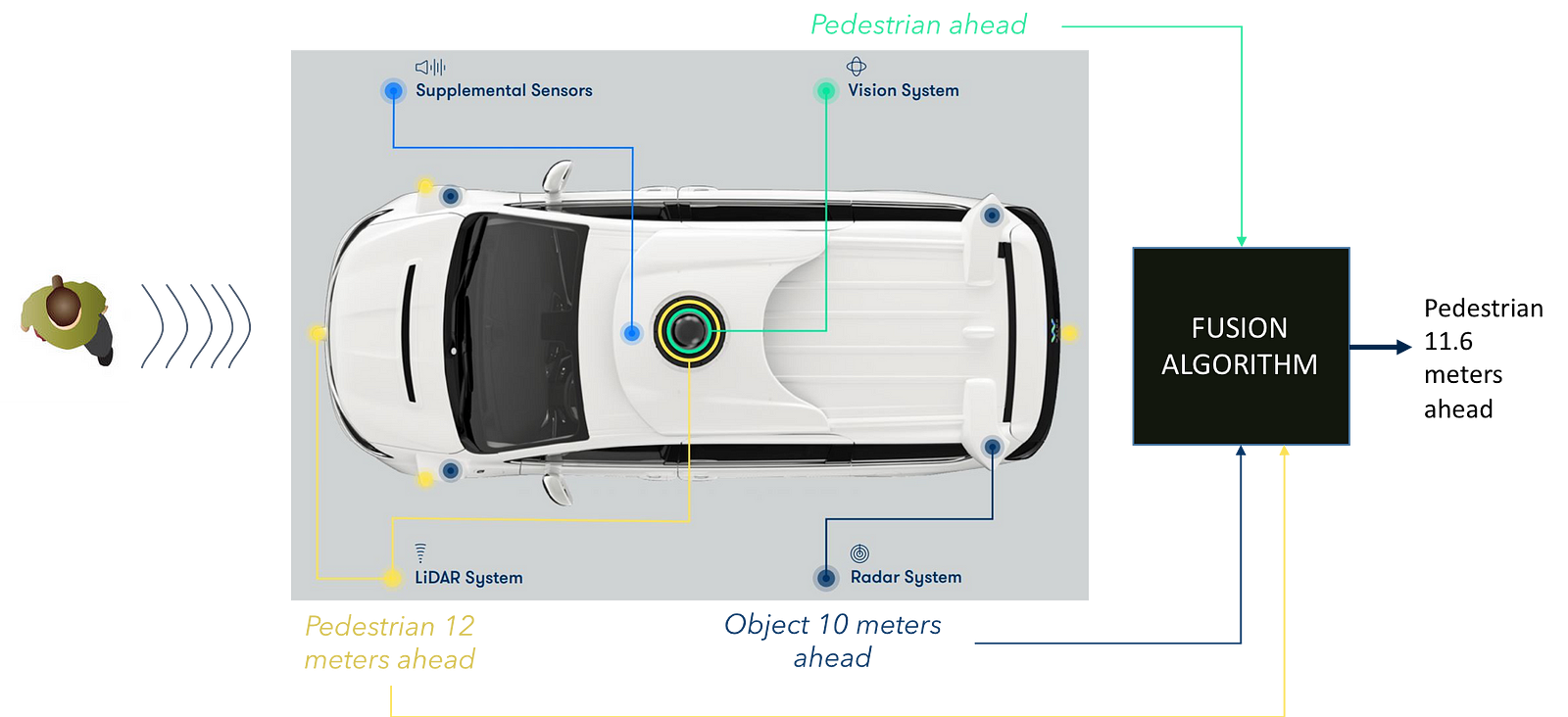

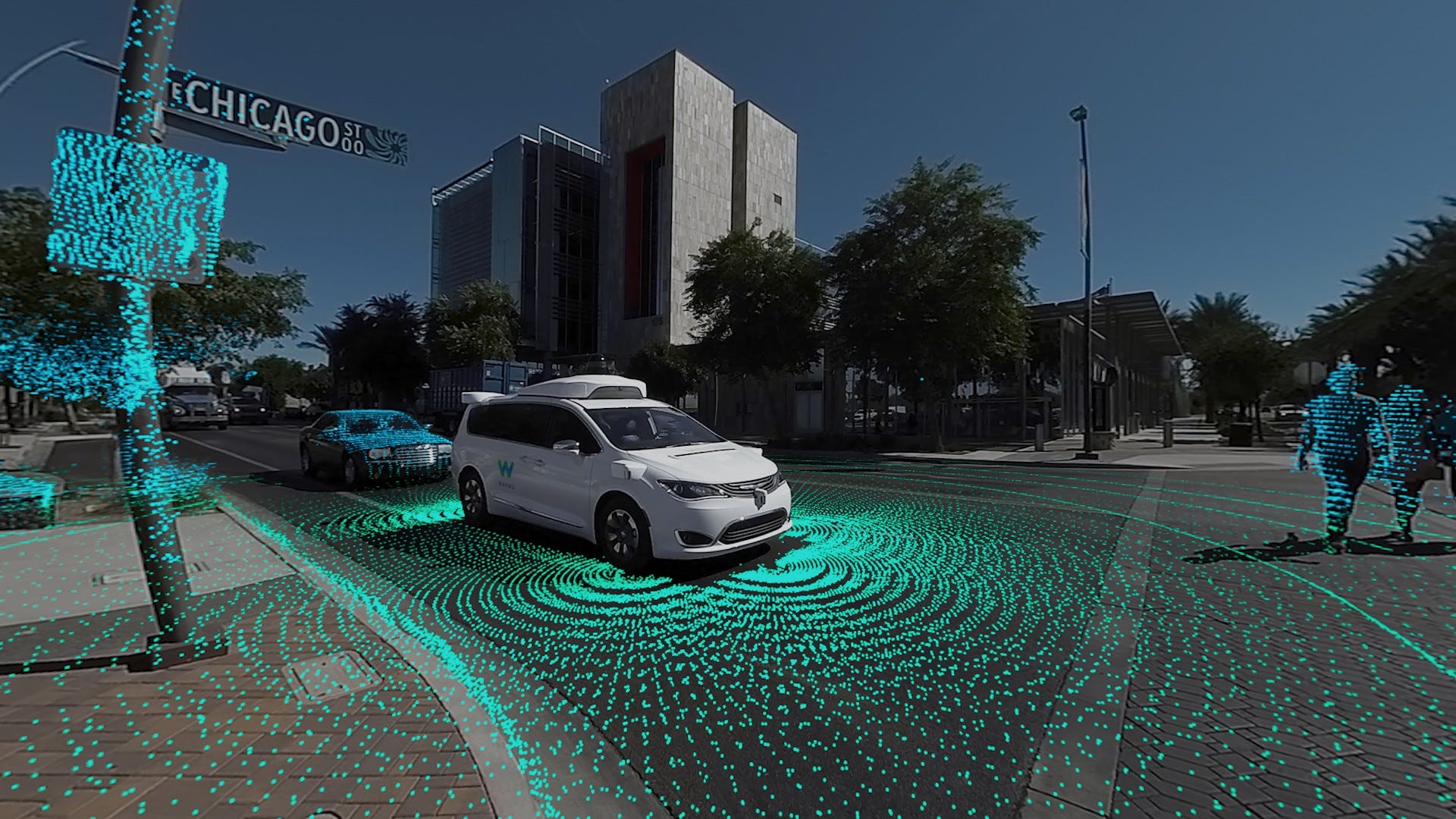

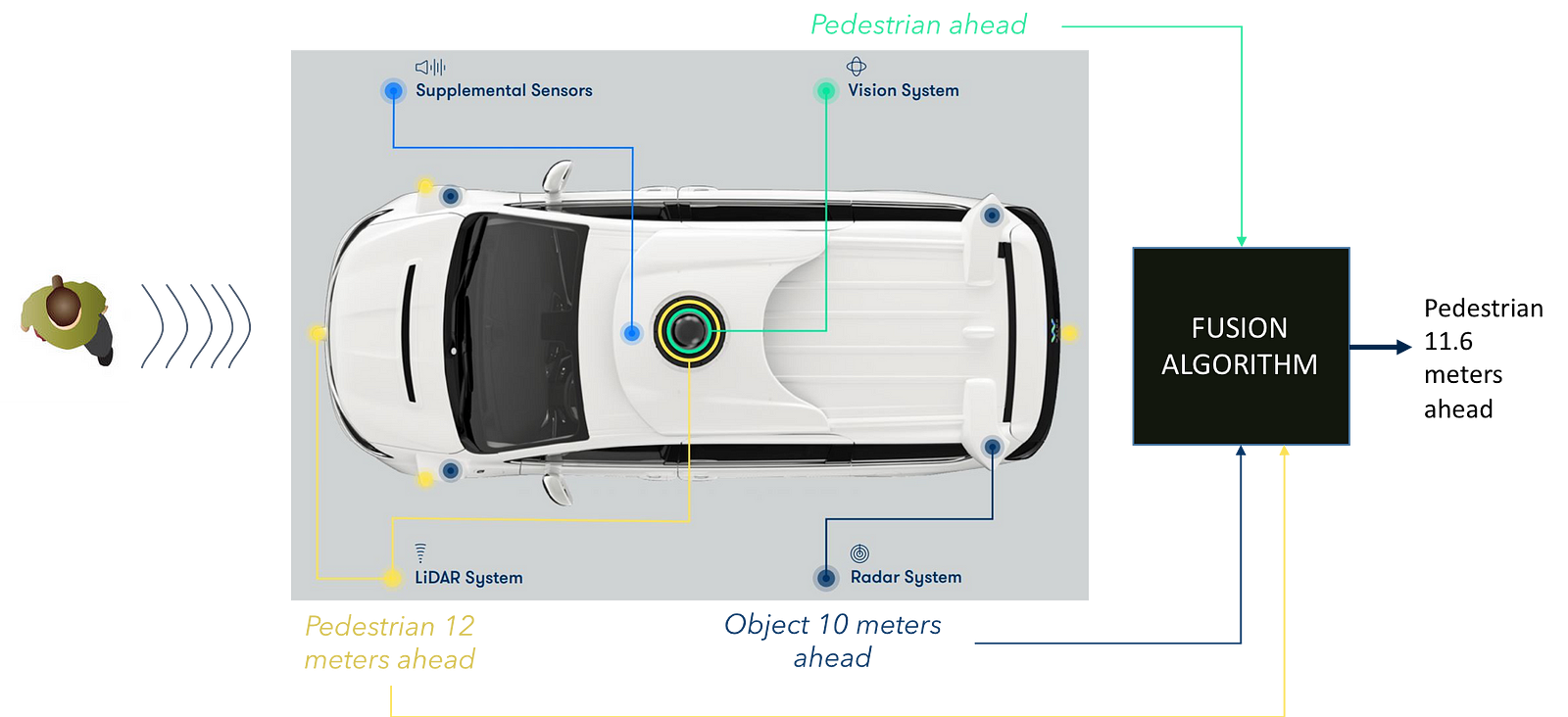

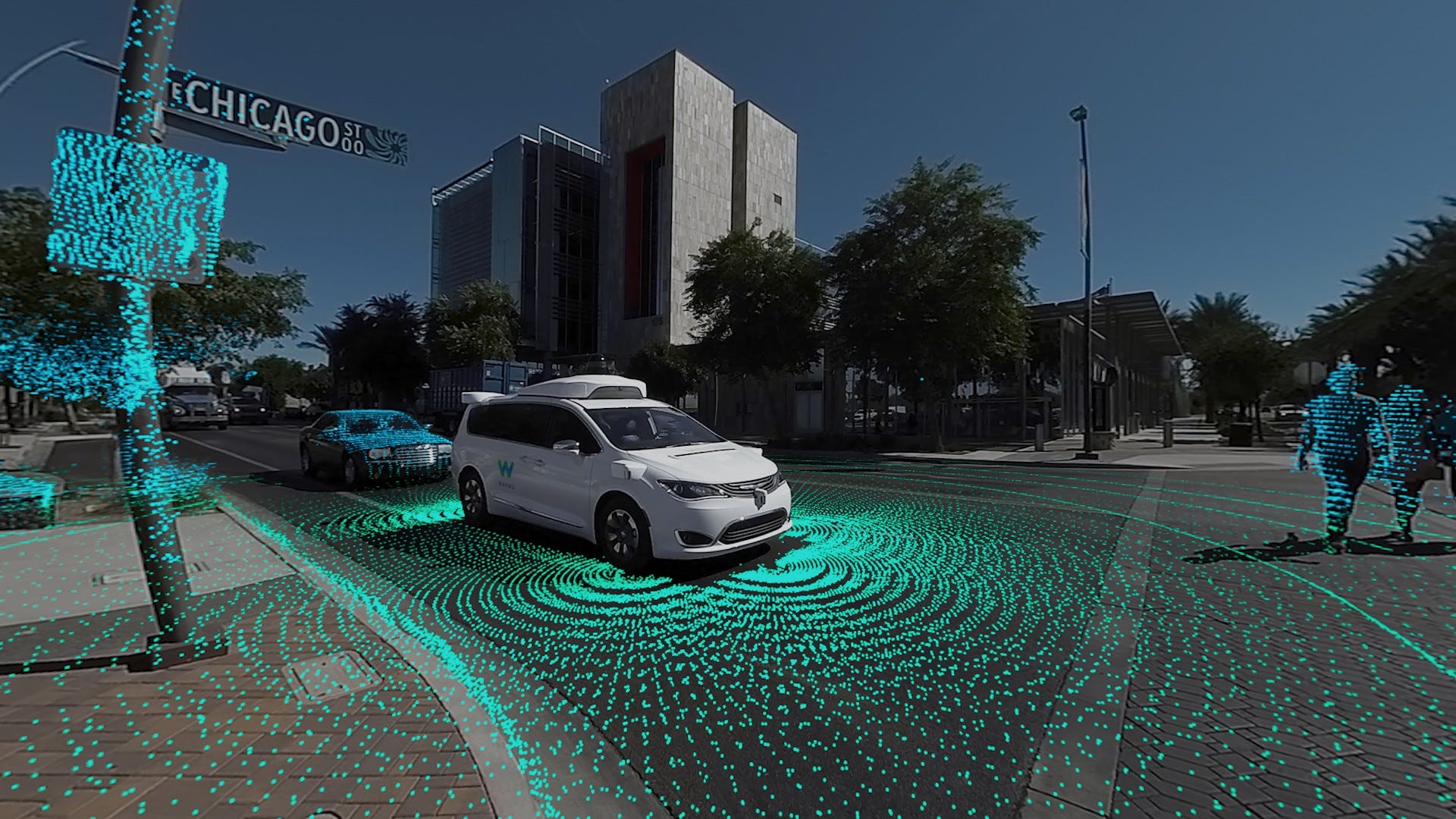

Course 4: Sensor Fusion

Tracking objects over time is a major challenge for understanding the environment surrounding a vehicle. Sensor fusion engineers from Mercedes-Benz will show you how to program fundamental mathematical tools called Kalman filters. These filters predict the location of other vehicles on the road and refine that estimate, along with a measure of its uncertainty, each time a new measurement arrives. You’ll even learn to do this with difficult-to-follow objects, by using an advanced technique: the extended Kalman filter.
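As a rough illustration of the idea, here is a one-dimensional Kalman filter in Python. It is only a sketch under simple assumptions: position is a single number, the belief is a Gaussian (mean and variance), and the measurement values, motion commands, and noise variances are made up for the example. The course project itself uses C++ and a multi-dimensional state.

```python
def update(mean, var, measurement, measurement_var):
    """Measurement step: fuse the current belief with a noisy measurement."""
    new_mean = (measurement_var * mean + var * measurement) / (var + measurement_var)
    new_var = 1.0 / (1.0 / var + 1.0 / measurement_var)
    return new_mean, new_var


def predict(mean, var, motion, motion_var):
    """Motion step: shift the belief and grow its uncertainty."""
    return mean + motion, var + motion_var


# Illustrative inputs (assumptions, not real sensor data).
measurements = [5.0, 6.0, 7.0, 9.0, 10.0]
motions = [1.0, 1.0, 2.0, 1.0, 1.0]
measurement_var = 4.0
motion_var = 2.0

# Start with almost no knowledge of the position: a very wide Gaussian.
mean, var = 0.0, 10000.0
for z, u in zip(measurements, motions):
    mean, var = update(mean, var, z, measurement_var)
    mean, var = predict(mean, var, u, motion_var)

print(mean, var)
```

Notice that the filter never becomes fully certain: the variance shrinks after each measurement and grows again after each motion, settling at a value set by the two noise levels.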

1. Sensors

Meet the team at Mercedes who will help you track objects in real time with Sensor Fusion.

2. Kalman Filters

Learn from the best! Sebastian Thrun will walk you through the usage and concepts of a Kalman Filter using Python.

3. C++ Checkpoint

4. Extended Kalman Filters

You'll build a Kalman Filter in C++ that's capable of handling data from multiple sources.

Why C++? Its performance makes it practical to track objects with a Kalman Filter in real time.

Project 5: Extended Kalman Filters

In this project, you'll apply everything you've learned so far about Sensor Fusion by implementing an Extended Kalman Filter in C++!

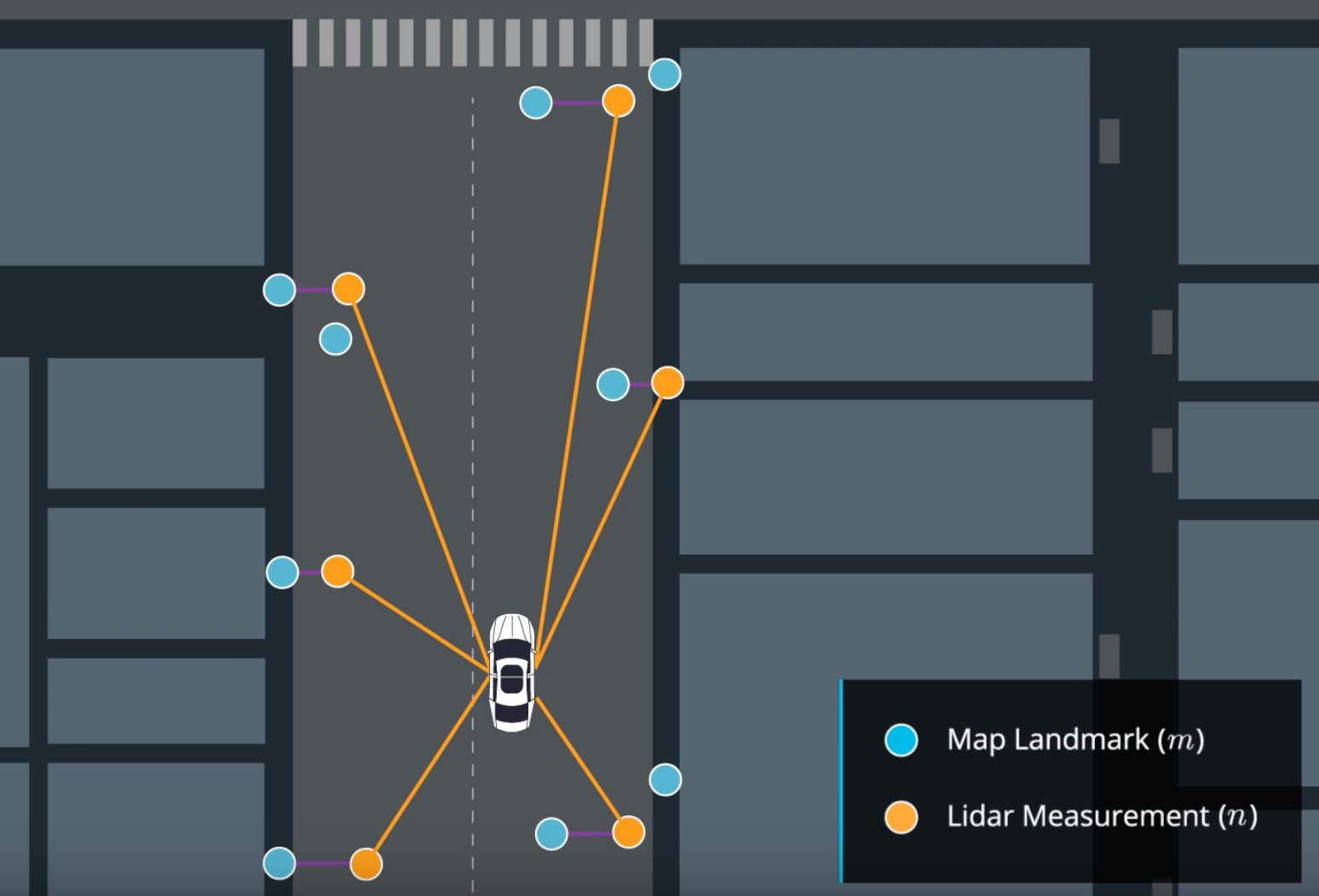

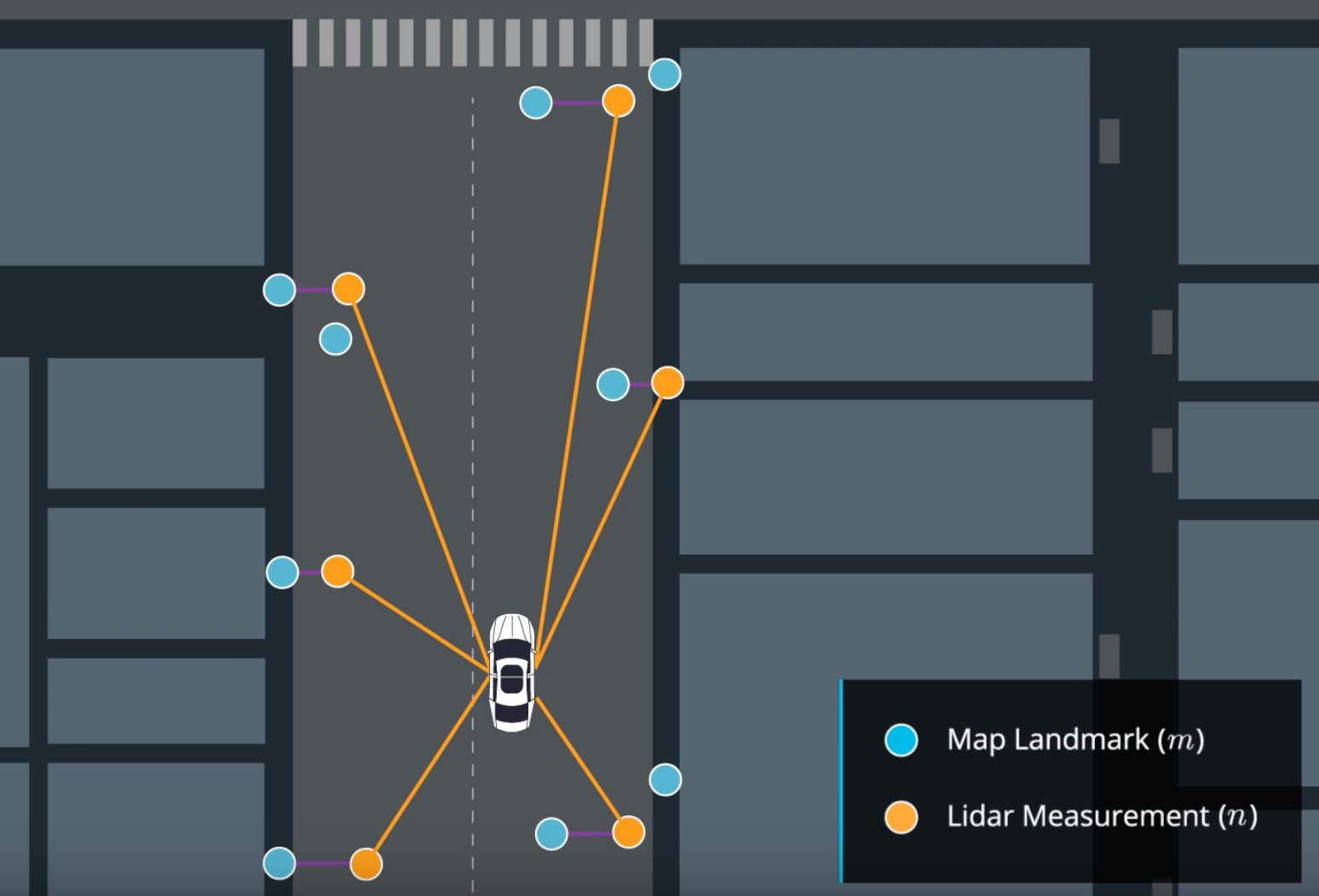

Course 5: Localization

Localization is how we determine where our vehicle is in the world. GPS is great, but it’s only accurate to within a few meters. We need single-digit centimeter-level accuracy! To achieve this, Mercedes-Benz engineers will demonstrate the principles of Markov localization to program a particle filter, which uses data and a map to determine the precise location of a vehicle.
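The principle behind Markov localization can be sketched with a tiny histogram filter before moving on to particle filters. The example below assumes a one-dimensional, circular world of five colored cells, a sensor that reports the right color most of the time, and a motion that occasionally over- or undershoots. The world layout, probabilities, and function names are all illustrative assumptions.

```python
def sense(belief, world, observation, p_hit=0.6, p_miss=0.2):
    """Measurement update: reweight each cell by how well it matches the observation."""
    weighted = [
        p * (p_hit if cell == observation else p_miss)
        for p, cell in zip(belief, world)
    ]
    total = sum(weighted)
    return [w / total for w in weighted]


def move(belief, step, p_exact=0.8, p_overshoot=0.1, p_undershoot=0.1):
    """Motion update on a circular world with imperfect movement."""
    n = len(belief)
    moved = []
    for i in range(n):
        prob = p_exact * belief[(i - step) % n]
        prob += p_overshoot * belief[(i - step - 1) % n]
        prob += p_undershoot * belief[(i - step + 1) % n]
        moved.append(prob)
    return moved


world = ["green", "red", "red", "green", "green"]
belief = [1.0 / len(world)] * len(world)  # start with no idea where we are

# The vehicle sees red, moves one cell right, sees red again, moves again.
for observation, step in [("red", 1), ("red", 1)]:
    belief = sense(belief, world, observation)
    belief = move(belief, step)

most_likely = belief.index(max(belief))
print(most_likely, [round(p, 3) for p in belief])
```

After two red observations in a row, the belief concentrates on the cell just past the two red cells. A particle filter follows the same sense-and-move cycle but represents the belief with random samples instead of a fixed grid.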

1. Introduction to Localization

Localization is a step implemented in the majority of robots and vehicles to determine their position with a very small margin of error. If we want to make decisions like overtaking a vehicle or simply defining a route, we need to know what’s around us (sensor fusion) and where we are (localization). Only with this information can we define a trajectory.

2. Markov Localization

3. Motion Models

Here you'll learn about vehicle movement and motion models to predict where your car will be at a future time.

4. Particle Filters

Sebastian will teach you what a particle filter is as well as the theory and math behind the filter.

5. Implementation of a Particle Filter

Now that you understand how a particle filter works, you'll learn how to code a particle filter.

Project 6: Kidnapped Vehicle

In this project, you'll build a particle filter and combine it with a real map to localize a vehicle!

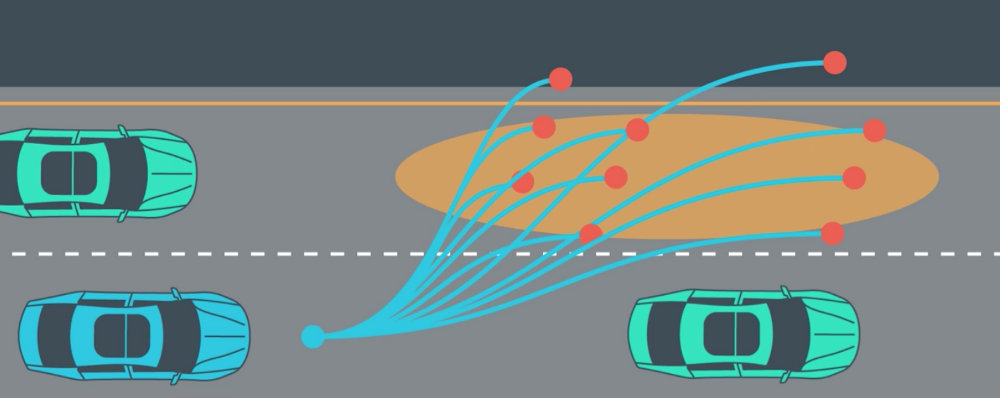

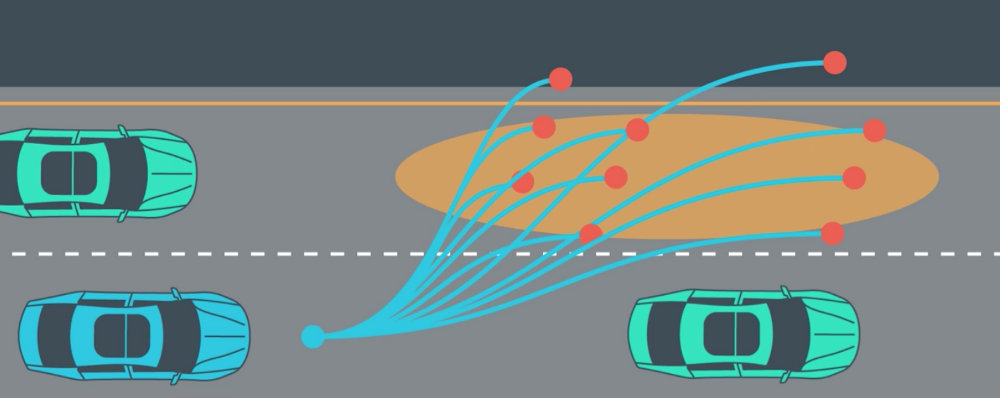

Course 6: Path Planning

Path planning routes a vehicle from one point to another, and it handles how to react when emergencies arise. The Mercedes-Benz Vehicle Intelligence team will take you through the three stages of path planning. First, you’ll apply model-driven and data-driven approaches to predict how other vehicles on the road will behave. Then you’ll construct a finite state machine to decide which of several maneuvers your own vehicle should undertake. Finally, you’ll generate a safe and comfortable trajectory to execute that maneuver.

1. Search

In this lesson you will learn about discrete path planning and algorithms for solving the path planning problem.

2. Prediction

In this lesson you'll learn how to use data from sensor fusion to generate predictions about the likely behavior of moving objects.

3. Behavior Planning

In this lesson you'll learn how to think about high-level behavior planning in a self-driving car.

4. Trajectory Generation

In this lesson, you’ll use C++ and the Eigen linear algebra library to build candidate trajectories for the vehicle to follow.

Project 7: Highway Driving

Drive a car down a highway with other cars using your own path planner.
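The discrete path planning covered in the Search lesson can be illustrated with a small grid search. The sketch below uses breadth-first search, which is one simple choice among several (A* is a common alternative); the grid, the start and goal cells, and the function name `shortest_path` are assumptions made up for the example.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are obstacles.

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    frontier = deque([start])
    while frontier:
        current = frontier.popleft()
        if current == goal:
            # Walk the parent links backwards to rebuild the path.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = current
                frontier.append((nr, nc))
    return None


# Illustrative map: 0 is free road, 1 is blocked.
grid = [
    [0, 0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 1, 0],
]
path = shortest_path(grid, (0, 0), (4, 5))
print(len(path) - 1, "moves:", path)
```

Because every move costs the same here, breadth-first search already returns a shortest route. A highway planner works in continuous space with moving traffic, so treat this only as the discrete starting point.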

Course 7: Control

Ultimately, a self-driving car is still a car, and we need to send steering, throttle, and brake commands to move the car through the world. Uber ATG will walk you through building both proportional-integral-derivative (PID) controllers and model predictive controllers. Between these control algorithms, you’ll become familiar with both basic and advanced techniques for actuating a vehicle.
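To show what a PID controller does, here is a minimal sketch in Python. The `PID` class is a textbook formulation; the `simulate` function is a deliberately crude toy model, assumed only for this example, in which the car's offset from the lane center changes at a rate equal to the steering command plus a constant drift. The gains and time step are illustrative, not tuned values from the course, where the project is written in C++.

```python
class PID:
    """Textbook PID controller; gains and names are illustrative."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def control(self, error, dt):
        """Return a command that pushes the error toward zero."""
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no history yet on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return -(self.kp * error + self.ki * self.integral + self.kd * derivative)


def simulate(pid, steps=500, dt=0.1, drift=0.3, offset=1.0):
    """Toy plant (an assumption): the offset changes at rate steering + drift."""
    for _ in range(steps):
        steering = pid.control(offset, dt)
        offset += (steering + drift) * dt
    return offset


pid = PID(kp=2.0, ki=0.5, kd=0.1)
final_offset = simulate(pid)
print(abs(final_offset) < 1e-3)
```

The proportional term does most of the steering, the derivative term damps the response, and the integral term slowly builds up until it cancels the constant drift, something a purely proportional controller could not do.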

1. PID Control

In this lesson you'll learn what PID controllers are and how to use them, with Sebastian!

Project 8: PID Controller

In this project you'll revisit the lake race track from the Behavioral Cloning Project. This time, however, you'll implement a PID controller in C++ to maneuver the vehicle around the track!

Project 9: Build Your Online Presence Using LinkedIn

Showcase your portfolio on LinkedIn and receive valuable feedback.

Project 10: Optimize Your GitHub Profile

Build a GitHub Profile on par with senior software engineers.

Course 8: System Integration

This is the capstone of the entire Self-Driving Car Engineer Nanodegree Program! We’ll introduce Carla, the Udacity self-driving car, and the Robot Operating System that controls her. You’ll work with a team of other Nanodegree students to combine what you’ve learned over the course of the entire Nanodegree Program to drive Carla, a real self-driving car, around the Udacity test track!

1. Autonomous Vehicle Architecture

Learn about the system architecture for Carla, Udacity's autonomous vehicle.

2. Introduction to ROS

Get an architectural overview of the Robot Operating System (ROS) framework and set up your own ROS environment on your computer.

3. Packages and Catkin Workspaces

Learn about ROS workspace structure, essential command line utilities, and how to manage software packages within a project. Harnessing these will be key to building shippable software using ROS.

4. Writing ROS Nodes

ROS Nodes are a key abstraction that allows a robot system to be built modularly. In this lesson, you’ll learn how to write them using Python.

Project 11: Programming a Real Self-Driving Car

Run your code on Carla, Udacity's autonomous vehicle!

For more information on autonomous vehicles, please visit https://www.nvidia.com/en-us/self-driving-cars/drive-platform/

